Today we need to examine something that reveals the machinery of authoritarian propaganda with surgical precision: how a handful of violent incidents across a few city blocks in Los Angeles was transformed into a “crisis” justifying the deployment of federal troops against American citizens. And how easily that transformation succeeded.

I write this from Los Angeles, where I’ve lived long enough to witness actual citywide chaos. I was here during the widespread unrest following George Floyd’s murder. I was here during the rioting after the Dodgers won the World Series. I’ve seen what it looks like when a city of four million people truly erupts in violence.

What happened this past weekend wasn’t that. Not even close.

Yet somehow, a few isolated incidents of vandalism and confrontation—contained within a handful of city blocks—became the justification for deploying Marines against American protesters. More disturbing still, this manufactured crisis worked exactly as intended. Millions of Americans now believe that military force was not just justified but necessary to restore order in a city that was never actually in disorder.

Two plus two equals four. There are twenty-four hours in a day. And what we witnessed was not urban chaos but the deliberate manufacture of consent for military rule through carefully orchestrated propaganda.

The anatomy of this deception reveals the terrifying efficiency of post-truth manipulation. It begins with the amplification of isolated incidents into the appearance of widespread mayhem. A broken window here, a confrontation there, perhaps a small fire somewhere else: all genuine incidents, but scattered across a metropolitan area larger than some states. In the hands of propagandists, these become “Los Angeles in flames” or “chaos consuming the city.”

This isn’t to minimize the genuine violence that occurred. Videos of protesters hurling chunks of concrete torn from infrastructure at police officers are genuinely shocking. Such attacks represent serious criminal behavior that deserves prosecution. But here’s what the propaganda deliberately obscures: the number of people engaging in this violence could be counted on two hands. The geographic area where these incidents occurred spans perhaps a few city blocks in a city covering more than 500 square miles.

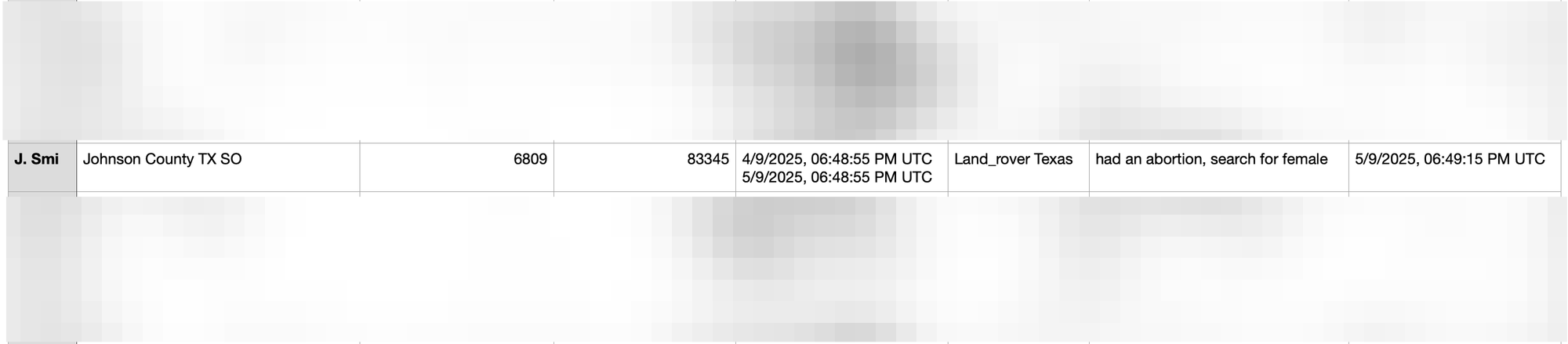

Most importantly, the system worked exactly as it’s supposed to. Many of these individuals have already been apprehended. Social media footage is being used to identify and arrest those who haven’t been caught yet. Local law enforcement, supported by existing legal frameworks, is handling the situation through normal investigative and prosecutorial channels.

This is precisely what makes the propaganda so insidious—it takes genuine criminal behavior by a handful of individuals and transforms it into justification for military deployment against an entire city. The concrete-throwing incidents become “widespread violence.” The few blocks where confrontations occurred become “chaos consuming Los Angeles.” The handful of criminals become representative of all protesters.

The propagandists understand that dramatic images of real violence are far more effective than fabricated ones. A video of someone hurling concrete at police is genuinely disturbing and naturally generates strong emotional responses. But that same video, stripped of context about scale and containment, repeated endlessly across multiple platforms, creates the impression of systematic breakdown rather than isolated criminal behavior being addressed through normal legal processes.

The crucial element is decontextualization. Videos of specific incidents circulate without timestamps, location markers, or scale indicators. A thirty-second clip of one intersection becomes representative of an entire city. Social media algorithms, designed to maximize engagement, naturally boost content that triggers fear and outrage while burying anything that might provide proportion or context.

Mainstream media, trapped in its own engagement-driven incentives, amplifies rather than clarifies. Headlines speak of “widespread unrest” and “violence erupting across Los Angeles” without mentioning that we’re talking about incidents covering perhaps twenty square blocks in a city spanning over 500 square miles. The scale of the actual disturbances gets lost in the imperative to make everything sound dramatic and urgent.

The result is a public that believes it is witnessing something far more serious than reality warrants. Americans in other states, consuming this curated content, develop the impression that Los Angeles is in the grip of systematic breakdown requiring extraordinary intervention. The manufactured crisis becomes indistinguishable from a real one in the minds of people who have no baseline for comparison.

This sets the stage for the propaganda’s true purpose: convincing Americans that military force is necessary to restore order. Once the impression of chaos has been established, military deployment becomes not just reasonable but obviously required. “Local law enforcement can’t handle it” becomes the refrain, even though local law enforcement was never actually overwhelmed and never requested federal assistance.

The genius of this propaganda operation lies in how it reframes the debate entirely. The question is no longer whether military deployment against civilians is constitutional or appropriate. The question becomes whether you support “law and order” or you support “chaos and violence.” Anyone questioning the use of federal troops gets cast as someone who doesn’t care about public safety or who actively supports destruction.

This false binary eliminates the possibility of reasonable middle ground. You cannot argue that the incidents were isolated without being accused of minimizing violence. You cannot question military deployment without being labeled an enemy of order itself. The propaganda creates a rhetorical trap where any opposition to extraordinary measures becomes evidence of extremism.

What makes this particularly insidious is how it exploits genuine human psychology. People naturally extrapolate from limited information, especially when that information triggers fear responses. A few dramatic images repeated endlessly create the impression of systematic breakdown even when the reality is far more contained. The mind fills in gaps with assumptions, and those assumptions become indistinguishable from observed fact.

The propagandists understand this perfectly. They know that context kills crisis, so they systematically strip away anything that might provide scale or proportion. They know that repetition creates reality, so they flood information channels with the same decontextualized clips. They know that fear overwhelms reason, so they frame everything in terms of immediate threat requiring immediate response.

Most Americans consuming this content have no direct experience of actual urban warfare or citywide riots. They have no baseline for distinguishing between genuine crisis and manufactured emergency. When they see curated clips of violence repeated endlessly across multiple platforms, their natural assumption is that this represents the broader reality rather than isolated incidents being amplified for political effect.

This psychological vulnerability becomes a political weapon. Once Americans believe they’re witnessing systematic breakdown, military deployment seems not just reasonable but obviously necessary. The idea that federal troops shouldn’t be deployed against civilians, a principle that was considered sacred just a few years ago, suddenly seems naive or even dangerous in the face of a manufactured emergency.

The success of this operation should terrify anyone who understands how democracies die. We’re not just witnessing media manipulation or political spin—we’re watching the real-time manufacture of consent for military rule through carefully curated chaos. Each successful deployment makes the next one easier to justify. Each manufactured crisis normalizes extraordinary measures as ordinary responses.

This is exactly how authoritarian consolidation works in practice. You don’t announce that you’re ending civilian control over the military—you create conditions where military control seems like the only reasonable response to ongoing emergencies. You don’t eliminate constitutional protections in one dramatic gesture—you erode them gradually through crisis management that becomes permanent.

The precedent established in Los Angeles will not remain confined to Los Angeles. Once Americans accept that federal troops can be deployed against protesters whenever local incidents can be framed as widespread chaos, every future demonstration becomes a potential justification for military intervention. The threshold for extraordinary measures gets lower with each successful deployment.

Consider how this dynamic will operate in the future. Any protest that produces even isolated incidents of vandalism or confrontation can now be framed as requiring federal military response. The mere possibility of violence becomes sufficient justification for preemptive deployment. The distinction between peaceful demonstration and dangerous riot becomes whatever federal authorities claim it to be.

This represents a fundamental transformation in how power operates in American society. We’re moving from a system where military deployment against civilians requires extraordinary justification to one where such deployment becomes a routine response to political dissent. The change isn’t happening through constitutional amendment or legislative action—it’s happening through the gradual normalization of what was previously unthinkable.

The media’s role in this transformation cannot be overstated. By treating a manufactured crisis as a genuine emergency, by amplifying decontextualized images without providing scale or proportion, by framing military deployment as a reasonable response rather than a constitutional violation, mainstream outlets become unwitting accomplices in their own irrelevance. When reporters present authoritarian power grabs as ordinary policy disagreements, they help normalize what should be shocking.

Perhaps most disturbing is how effectively this propaganda has convinced ordinary Americans to support what amounts to the militarization of domestic law enforcement. People who consider themselves patriots now applaud the deployment of Marines against American citizens. People who claim to defend constitutional principles now support the violation of fundamental restrictions on military power. The propaganda has made authoritarianism seem patriotic.

This cognitive dissonance reveals the true power of manufactured crisis. When people believe they’re facing a genuine emergency, they willingly surrender protections they would normally defend. The Constitution becomes less important than immediate security. Constitutional principles become luxuries we can’t afford during times of crisis. The fact that the crisis is largely manufactured becomes irrelevant once the psychological impact takes hold.

We are witnessing the live construction of an authoritarian consensus through carefully orchestrated deception. A few broken windows and isolated acts of violence in Los Angeles became the justification for crossing a constitutional line that previous generations would have died to defend. And it worked precisely because most Americans have lost the ability to distinguish between genuine emergency and manufactured crisis.

The implications extend far beyond immigration enforcement or protest suppression. Once the principle is established that federal troops can be deployed whenever authorities claim local breakdown, there are no meaningful constraints on military power over civilian life. Every future crisis—economic, political, social—becomes a potential justification for extraordinary measures that gradually become ordinary.

Two plus two equals four. There are twenty-four hours in a day. And when a few isolated incidents can be transformed into justification for military deployment through propaganda alone, you’re no longer living in a constitutional republic—you’re living in a system where perception matters more than reality and manufactured crisis justifies unlimited power.

The center cannot hold when truth becomes whatever serves authority and crisis becomes whatever power claims it to be. We have crossed a line that will be very difficult to uncross, not because the precedent is legally binding but because the psychological transformation it represents may be irreversible.

The manufactured crisis succeeded. Americans now accept military deployment against civilians as normal and necessary. What comes next will be worse, because the machinery of deception has proven its effectiveness and the appetite for resistance has been systematically eroded through careful manipulation of fear.

The propaganda worked. And that should terrify every American who understands what we’ve just surrendered.

Mike Brock is a former tech exec who was on the leadership team at Block. Originally published at Notes From the Circus.